SINDy¶

For users already familiar with the interface, see the API docs.

SINDy stands for Sparse Identification of Nonlinear Dynamics [1]. It is a data-driven method for discovering dynamical systems models from measurement data using sparse regression.

Main ideas¶

Suppose we have a set of measurements \(x(t)\in \mathbb{R}^n\) from some physical system at different points in time \(t\). SINDy attempts to represent the time evolution of \(x(t)\) in terms of a nonlinear function \(f\):

This equation constitutes a dynamical system for the measurements \(x(t)\). The vector \(x(t)=[x_1(t), x_2(t), \dots x_n(t)]^\top\) gives the state of the physical system at time \(t\). The function \(f(x(t))\) constrains how the system evolves in time.

The key idea behind SINDy is that the function \(f\) is often sparse in the space of an appropriate set of basis functions. For example, the function

is sparse with respect to the set of polynomials of two variables in the sense that if we were to write an expansion of the component functions of \(f\) in this basis (e.g. \(f_{1}(x) = \sum_{i=0}^\infty\sum_{j=0}^\infty a_{i,j}x_1^ix_2^j\)), only a small number of coefficients (\(a_{i,j}\)) would be nonzero.

SINDy employs sparse regression to find a linear combination of basis functions that best capture the dynamic behavior of the physical system.

Approximation problem¶

To apply SINDy in practice one needs a set of measurement data collected at times \(t_1, t_2, \dots, t_n\), and the time derivatives of these measurements (either measured directly or numerically approximated). These data are aggregated into the matrices \(X\) and \(\dot X\), respectively

Next, one forms a library matrix \(\Theta(X)\) whose columns consist of a chosen set of basis functions applied to the data

For example, if \(\theta_1(x), \theta_2(x), \dots, \theta_\ell(x)\) are monomials (\(\theta_i(x) = x^{i-1}\)), then

where vector products and powers are understood to be element-wise.

We seek a set of sparse coefficient vectors (collected into a matrix)

The vector \(\xi_i\) provides the coefficients for a linear combination of basis functions \(\theta_1(x), \theta_2(x), \dots, \theta_\ell(x)\) representing the \(i\)th component function of \(f\): \(f_i(x)\). That is to say, \(f_i(x) = \Theta\left(x^\top\right) \xi_i\), where \(\Theta\left(x^\top\right)\) is understood to be a row vector consisting of symbolic functions (whereas \(\Theta(X)\) is a matrix whose entries are numerical values).

With each of the objects \(X\), \(\dot X\), \(\Theta(X)\), and \(\Xi\) being defined, we are ready to write down the approximation problem underlying SINDy:

Deeptime SINDy objects¶

Deeptime has two SINDy objects:

SINDy is used to solve the above optimization problem given \(\Theta\), \(X\), and (optionally) \(\dot{X}\). By default \(\Theta\) is a polynomial library of degree 2 and the sequentially-thresholded least-squares algorithm [1] is used to solve the optimization problem. If \(\dot{X}\) is not passed in, then it is estimated from \(X\) with a first order finite difference method.

SINDyModel represents the learned dynamical system. It can be used to predict derivatives given state variables, simulate forward in time from novel initial conditions, and to score itself against ground truth data.

Let’s see how these objects work with an example.

Example¶

Suppose we have measurements of the position of a particle obeying the following dynamical system at different points in time:

Note that this system of differential equations decouples into two differential equations whose solutions are simply \(x(t) = x_0e^{-2t}\) and \(y(t) = y_0e^t\), where \(x_0 = x(0)\) and \(y_0=y(0)\) are the initial conditions.

Using the initial conditions \(x_0 = 3\) and \(y_0 = \tfrac{1}{2}\), we construct the data matrix \(X\).

[1]:

import numpy as np

[2]:

t = np.linspace(0, 1, 100)

x = 3 * np.exp(-2 * t)

y = 0.5 * np.exp(t)

X = np.stack((x, y), axis=-1) # First column is x, second is y

To form a SINDy estimator we can select a feature library and an optimization routine.

Let’s use polynomials of degree up to three for the candidate library \(\Theta\) and sequentially-thresholded least-squares with a threshold of 0.2 to solve the optimization problem

[3]:

from sklearn.preprocessing import PolynomialFeatures

from deeptime.sindy import STLSQ

library = PolynomialFeatures(degree=3)

optimizer = STLSQ(threshold=0.2)

Following the scikit-learn workflow, we first instantiate a SINDy estimator with the desired properties, then fit it to the data in separate step.

[4]:

from deeptime.sindy import SINDy

# Instantiate the estimator

estimator = SINDy(

library=library,

optimizer=optimizer,

input_features=["x", "y"] # The feature names are just for printing

)

# Fit the estimator to data

estimator.fit(X, t=t);

Once the estimator has been fit, we extract the learned model.

[5]:

model = estimator.fetch_model();

We can inspect the governing equations discovered by SINDy and check whether they seem reasonable with the print function.

[6]:

model.print()

x' = -1.999 x

y' = 0.999 y

The learned model is very close to the true governing equations!

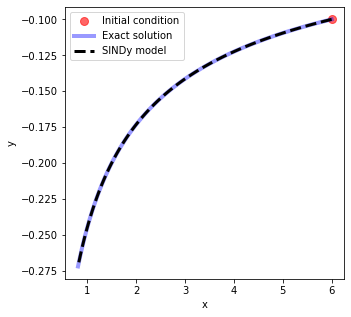

We can use it to evolve new initial conditions forward in time with the simulate method.

[7]:

x0 = 6

y0 = -0.1

t_test = np.linspace(0, 1, 100)

x_test = x0 * np.exp(-2 * t_test)

y_test = y0 * np.exp(t_test)

sim = model.simulate([x0, y0], t=t_test)

[8]:

import matplotlib.pyplot as plt

fig, ax = plt.subplots(1, 1, figsize=(5, 5))

ax.plot(x0, y0, "ro", label="Initial condition", alpha=0.6, markersize=8)

ax.plot(x_test, y_test, "b", label="Exact solution", alpha=0.4, linewidth=4)

ax.plot(sim[:, 0], sim[:, 1], "k--", label="SINDy model", linewidth=3)

ax.set(xlabel="x", ylabel="y")

ax.legend();

For more fine-grained control over various aspects of SINDy (e.g. different optimizers or feature libraries), the PySINDy package provides Deeptime API-compatible objects.

References